I'm a skilled data engineer who loves diving into technical challenges and building robust, scalable solutions. I have a strong background in cloud architecture and a knack for designing and implementing data solutions that directly benefit the business. My goal is to transform complex data problems into clear, actionable insights. I'm also passionate about emerging technologies, especially AI and micro-robotics, and I'm always exploring how to integrate these innovations into my work to drive efficiency and push boundaries. Beyond the technical side, I believe in the power of collaboration and enjoy working with diverse teams. I also find fulfillment in sharing knowledge, and I'm always open to connecting with others in the field. When I'm not coding, you can find me reading sci-fi books, hiking, or doing volunteer work.

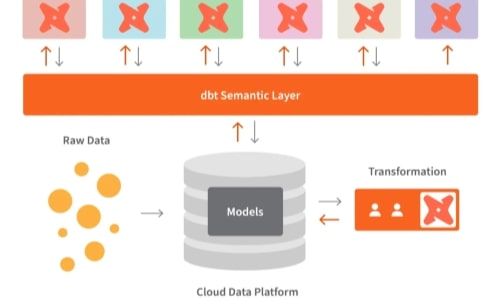

I designed and implemented an Enterprise Data Management layer to serve as a single, application-agnostic source of truth for all key business metrics. This initiative fostered a cross-functional partnership with teams across domains, breaking down data silos by defining and modeling a unified data set.

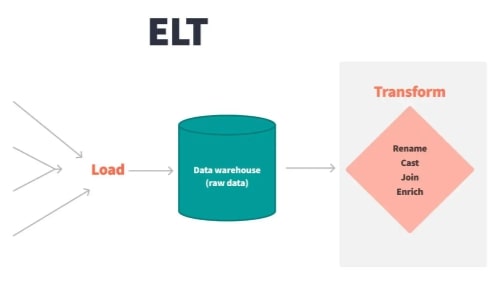

I used DBT to transform and model data for OutSystems applications, using a combination of audit and edit tables. These models were materialized in EDB, ensuring efficient data tracking and smooth integration.

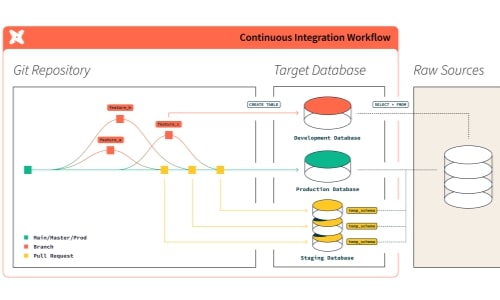

I implemented a Git-based release strategy to ensure consistent DBT builds and tests across multiple environments using CI/CD pipelines. This streamlined deployments and improved model reliability.

I implemented a custom deployment for a scalable on-prem data twin, using Portainer to manage Dockerized services. Trino and EDB powered high-performance querying and storage, with CI/CD ensuring seamless updates.

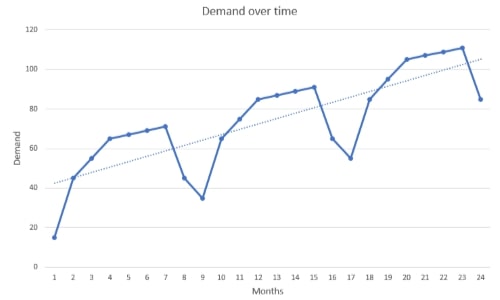

I developed multiple forecasting models to predict demand from historical order data, leveraging statistical techniques and Pandas for data processing. Insights were presented with clear visualizations and storytelling.
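As a rough illustration of the kind of statistical technique involved (not the actual models, which are not described here), the sketch below applies simple exponential smoothing to a short order history. The function name, the `alpha` value, and the sample numbers are all made up for the example, and it uses plain Python lists rather than Pandas to stay self-contained.

```python
def exponential_smoothing_forecast(history, alpha=0.5):
    """Return a one-step-ahead forecast via simple exponential smoothing.

    `history` is a sequence of past demand values, oldest first.
    `alpha` (0 < alpha <= 1) controls how strongly recent values are weighted.
    """
    if not history:
        raise ValueError("history must contain at least one observation")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in the interval (0, 1]")

    # Start from the first observation and blend in each later one.
    level = history[0]
    for observed in history[1:]:
        level = alpha * observed + (1 - alpha) * level
    return level


# Hypothetical weekly order counts, purely illustrative.
weekly_orders = [100, 120, 110, 130]
forecast = exponential_smoothing_forecast(weekly_orders, alpha=0.5)
print(f"Forecast for next week: {forecast:.1f}")
```

A higher `alpha` makes the forecast react faster to recent orders, while a lower one smooths out noise.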

I designed a standardized deployment process for web applications in AWS, using Terraform for infrastructure as code and CI/CD pipelines for automated provisioning and updates. The process is backed by a self-made Terraform module.

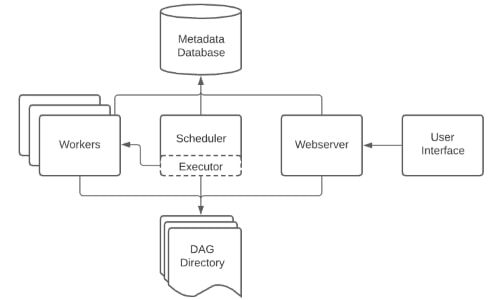

I deployed Apache Airflow and developed custom Python operators for database integration, ETL on a custom engine, and Tableau integration, streamlining complex data workflows and automating them as task graphs (DAGs).

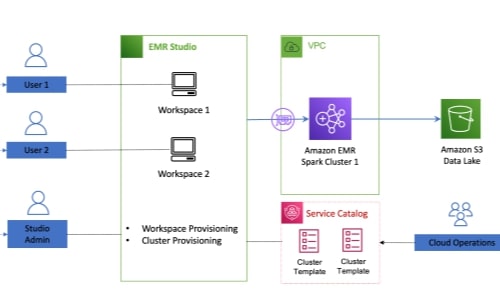

I led the rollout of EMR Studio to support Python notebooks, using AWS CloudFormation for infrastructure automation. This enabled scalable, cost-efficient processing with Apache Spark and Python for different teams.

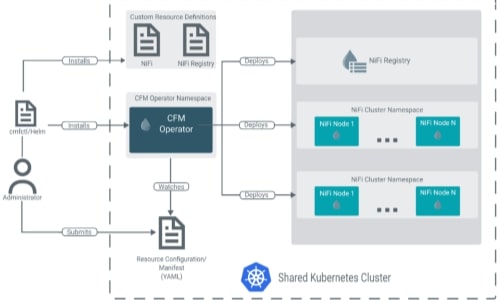

I built a cost-efficient data refresh engine using Apache NiFi and Spark, writing custom Spark jobs and Python scripts for scheduling and database interfacing. I also modeled tracking tables to monitor refresh statuses.
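To give an idea of what a refresh-tracking table can look like, here is a minimal sketch. The table name, columns, and status values are assumptions chosen for illustration, and SQLite stands in for the real database, which is not specified here.

```python
import sqlite3
from datetime import datetime, timezone

# In-memory SQLite database as a stand-in for the real tracking store.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE refresh_tracking (
        dataset    TEXT PRIMARY KEY,
        status     TEXT NOT NULL
                   CHECK (status IN ('RUNNING', 'SUCCEEDED', 'FAILED')),
        updated_at TEXT NOT NULL
    )
    """
)


def record_status(connection, dataset, status):
    """Insert or overwrite the latest refresh status for a dataset."""
    now = datetime.now(timezone.utc).isoformat()
    connection.execute(
        "INSERT OR REPLACE INTO refresh_tracking (dataset, status, updated_at) "
        "VALUES (?, ?, ?)",
        (dataset, status, now),
    )
    connection.commit()


# Hypothetical refresh run for a dataset called "orders".
record_status(conn, "orders", "RUNNING")
record_status(conn, "orders", "SUCCEEDED")

current = conn.execute(
    "SELECT status FROM refresh_tracking WHERE dataset = ?", ("orders",)
).fetchone()[0]
row_count = conn.execute("SELECT COUNT(*) FROM refresh_tracking").fetchone()[0]
print(current, row_count)
```

Keeping one row per dataset makes the current state cheap to query. A design that needs full history would append rows instead of overwriting them.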

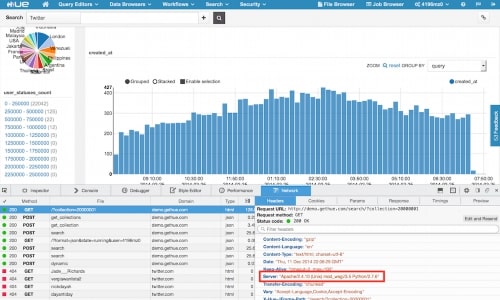

I deployed Apache Hue on AWS EMR to establish a standard data governance environment, enabling efficient data exploration and management. Custom EMR steps and Bash scripts were used to automate the deployment.

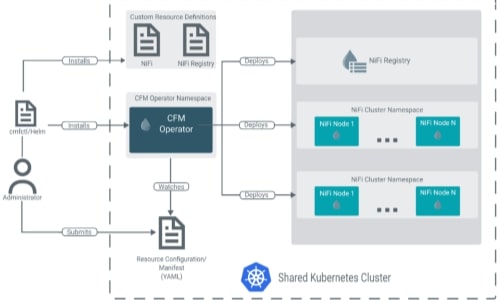

I built a data lake Kubernetes cluster on AWS EKS, integrating NiFi, Airflow, S3 configuration and other modules. Terraform was used for automation, enabling seamless deployment of the data ecosystem.

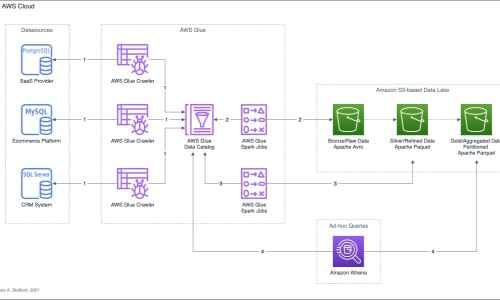

The ANPR Big Data Platform is a scalable and extensible platform for collecting, storing and aggregating ANPR data. I constructed an AWS-native big data lake for analysis, reporting and future processing for other teams.

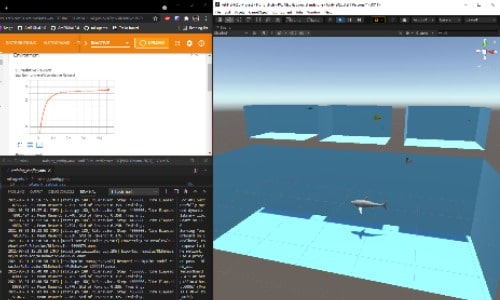

ArtiFISHal Intelligence consists of scenes made in Unity which illustrate several reinforcement learning techniques. It also contains a simulation of a marine ecosystem which uses evolutionary algorithms.

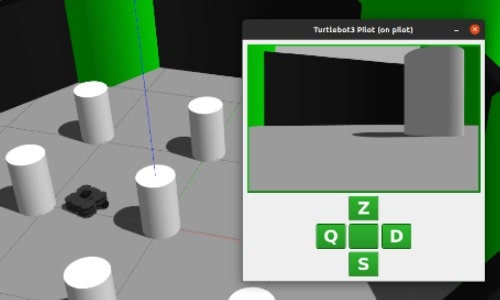

The Turtlebot3 was simulated in Gazebo, using the ROS2 framework. The agent was trained with Q-learning, receiving positive rewards for beneficial actions, and eventually learned to race on a 3D track.
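For readers unfamiliar with Q-learning, the core update rule can be sketched in a few lines. This is a generic tabular version, not the project's code: the state labels, action names, learning rate `alpha`, and discount factor `gamma` are placeholders.

```python
from collections import defaultdict


def q_update(q_table, state, action, reward, next_state, actions,
             alpha=0.1, gamma=0.9):
    """Apply one tabular Q-learning update and return the new Q-value.

    Q(s, a) <- Q(s, a) + alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))
    """
    # Value of the best action available from the next state.
    best_next = max(q_table[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q_table[(state, action)] += alpha * (td_target - q_table[(state, action)])
    return q_table[(state, action)]


# Hypothetical discrete action set for a driving robot.
actions = ["left", "forward", "right"]
q_table = defaultdict(float)  # unseen (state, action) pairs start at 0.0

# One illustrative transition: moving forward from "s0" earns a reward of 1.
new_value = q_update(q_table, "s0", "forward", 1.0, "s1", actions)
print(new_value)
```

Repeating this update over many simulated episodes lets rewarded actions accumulate higher values, which is what steers the agent toward beneficial behavior.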

Sierteelt addresses a problem in floriculture: human influence and bias. An art exhibition was set up with a Generative Adversarial Network (GAN) as its centerpiece. It generated plant-hand-like hybrids using a webcam.